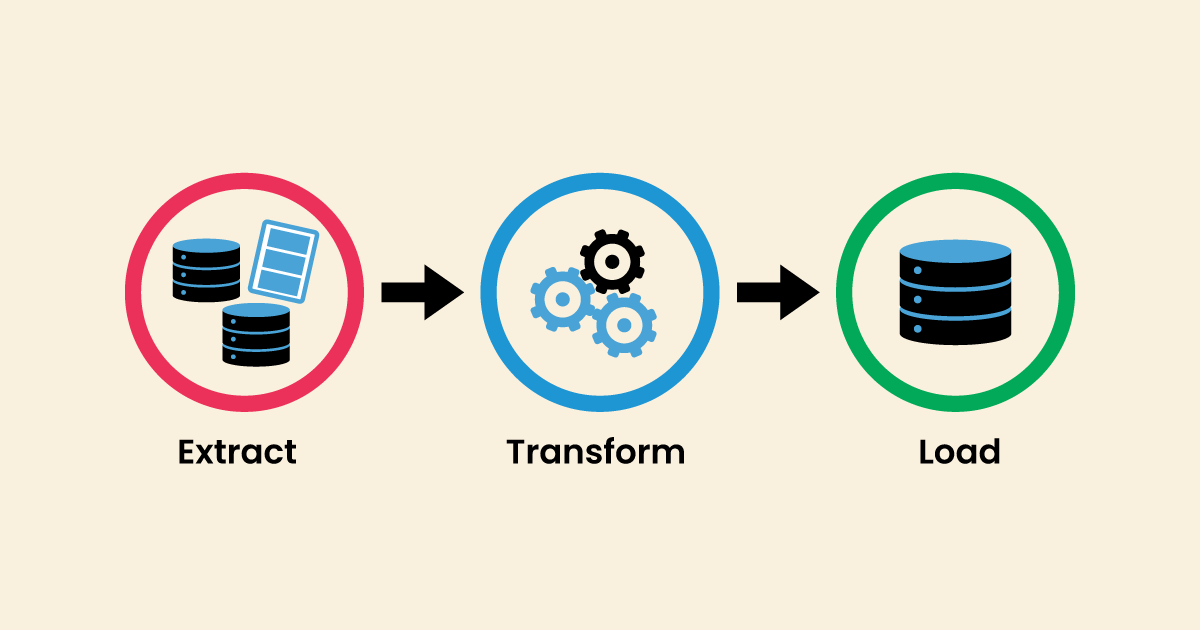

ETL, short for “Extract, Transform, and Load,” is a set of processes that retrieves data from one system, refines it, and then deposits it into a designated repository. An ETL pipeline is a traditional type of data pipeline: it cleanses, enriches, and converts data from diverse sources before combining it for use in data analytics, business intelligence, and data science.

As databases gained popularity in the 1970s, ETL emerged as a key process for integrating and loading data for computation and analysis, and it gradually became the primary method for processing data in data warehousing initiatives.

How does Extract, Transform, Load work?

ETL forms the bedrock of data analytics and machine learning initiatives. Using a set of business rules, ETL cleanses and structures data to meet specific business intelligence needs, such as monthly reporting. Its capabilities extend further, however, to more advanced analytics that can improve back-end processes or end-user experiences. Organizations frequently use ETL to:

- Extract data from legacy systems.

- Cleanse data to enhance data integrity and establish uniformity.

- Load data into a designated target database.
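The three steps above can be sketched end to end in Python. This is a minimal illustration, not a production pipeline: the inline CSV stands in for a legacy export, the cleansing rules (trim names, fill missing countries with `UNKNOWN`, drop exact duplicates) are hypothetical, and an in-memory SQLite database plays the role of the target database.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a legacy system (inline so the sketch is self-contained).
RAW_CSV = """customer_id,name,country
1, Alice ,us
2,Bob,
2,Bob,
3,Carol,DE
"""

def extract(raw_text):
    """Extract: read rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))

def transform(rows):
    """Transform: trim whitespace, fill missing values, normalize codes, drop duplicates."""
    seen = set()
    cleaned = []
    for row in rows:
        record = (
            int(row["customer_id"]),
            row["name"].strip(),
            (row["country"].strip() or "UNKNOWN").upper(),
        )
        if record not in seen:  # skip exact duplicates
            seen.add(record)
            cleaned.append(record)
    return cleaned

def load(records, conn):
    """Load: write the cleaned records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(customer_id INTEGER PRIMARY KEY, name TEXT, country TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for the target database
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM customers ORDER BY customer_id").fetchall())
# [(1, 'Alice', 'US'), (2, 'Bob', 'UNKNOWN'), (3, 'Carol', 'DE')]
```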

Why Leverage ETL?

ETL is especially important for modern organizations because structured and unstructured data now arrives from many diverse sources, including:

- Customer data sourced from online payment gateways and customer relationship management (CRM) platforms.

- Inventory and operational data obtained from vendor systems.

- Sensor-generated data stemming from Internet of Things (IoT) devices.

- Marketing insights gleaned from social media platforms and customer feedback channels.

- Employee records managed within internal human resources systems.

Applying the extract, transform, and load (ETL) process converts raw datasets into a format and structure suited to analysis, which in turn yields deeper and more meaningful insights.

For instance, online retailers can harness ETL to scrutinize data gleaned from point-of-sale transactions, facilitating the prediction of demand patterns and optimization of inventory management strategies. Similarly, marketing teams can employ ETL to amalgamate CRM data with customer feedback sourced from social media platforms, enabling a nuanced examination of consumer behavior and preferences. Thus, ETL serves as the linchpin for unlocking actionable insights from the vast reservoirs of data at organizations’ disposal, empowering informed decision-making and strategic foresight.

Enhancing Business Intelligence through ETL Processes

Extract, Transform, and Load (ETL) strengthens business intelligence and analytics by making them more reliable, accurate, detailed, and efficient.

Historical Context Enrichment

ETL adds historical context to an organization’s data repository. By combining legacy data with inputs from newer platforms and applications, enterprises gain a complete timeline of how their data has evolved. Viewing older datasets alongside recent information gives stakeholders a long-term view of trends, which supports informed decision-making and strategic planning.

Unified Data Perspective

A cornerstone of ETL’s contribution to business intelligence is a consolidated view of data that supports in-depth analysis and reporting. Managing disparate datasets takes significant time and coordination and can lead to inefficiencies and bottlenecks. ETL avoids these problems by combining databases and diverse data formats into a single, consistent framework. This integration improves data quality and simplifies the tedious work of moving, categorizing, and standardizing data. As a result, the combined dataset is easier to analyze, visualize, and interpret, giving stakeholders actionable insights from large volumes of data.

Precision in Data Analysis

ETL increases the precision of data analysis, which is crucial for meeting compliance and regulatory standards. Integrating ETL tools with data quality tools enables thorough profiling, auditing, and cleansing of data, ensuring its integrity and reliability. By establishing confidence in the accuracy of data assets, ETL strengthens the foundation on which strategic decisions and regulatory compliance measures rest.
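Profiling usually starts with simple per-column measurements. The sketch below is an illustration under stated assumptions rather than the behavior of any particular data quality tool: the `profile` helper is hypothetical and reports missing values, distinct values, and fully duplicated rows, which are the kinds of signals an audit or cleansing step would act on.

```python
from collections import Counter

def profile(rows, columns):
    """Hypothetical helper: simple per-column quality metrics for a list of dict rows."""
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        report[col] = {
            # Treat None and empty strings as missing.
            "missing": sum(1 for v in values if v in (None, "")),
            "distinct": len({v for v in values if v not in (None, "")}),
        }
    # Count rows that repeat an earlier row in every profiled column.
    counts = Counter(tuple(row.get(c) for c in columns) for row in rows)
    report["_duplicate_rows"] = sum(n - 1 for n in counts.values() if n > 1)
    return report

# Hypothetical sample records.
rows = [
    {"id": "1", "email": "a@example.com"},
    {"id": "2", "email": ""},
    {"id": "2", "email": ""},
    {"id": "3", "email": "c@example.com"},
]
print(profile(rows, ["id", "email"]))
```

An audit step could then compare these metrics against agreed thresholds (for example, no missing IDs) before the data is loaded.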

Automation of Repetitive Tasks

A hallmark of ETL’s efficacy lies in its capacity to automate repetitive data processing tasks, thereby fostering efficiency in analysis workflows. ETL tools streamline the arduous data migration process, empowering users to configure automated data integration routines that operate periodically or dynamically. This automation liberates data engineers from the drudgery of manual data manipulation tasks, allowing them to channel their efforts towards innovation and value-added initiatives.

ETL serves as a catalyst for business intelligence, giving it the reliability, precision, and efficiency needed to navigate the complexities of modern data landscapes.

The ETL Evolution

The evolution of Extract, Transform, and Load (ETL) methodology has been profoundly influenced by the shifting paradigms of data storage, analysis, and management, reflecting the dynamic landscape of technological advancements and organizational imperatives.

Traditional ETL Landscape

Rooted in the advent of relational databases, traditional ETL practices emerged to address the challenges posed by transactional data storage formats. Initially, data repositories structured as tables facilitated storage but impeded analytical endeavors. Imagine a spreadsheet with rows representing individual transactions in an e-commerce system, containing redundant entries for recurring customer purchases throughout the year. Analyzing trends or identifying popular items amidst this data deluge proved arduous due to duplication and convoluted relational links.

To mitigate such inefficiencies, early ETL tools automated the conversion of transactional data into relational formats, organizing disparate data elements into interconnected tables. Analysts leveraged query capabilities to discern correlations, patterns, and insights embedded within the relational schema, thereby enhancing analytical precision and efficiency.
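The e-commerce scenario above can be illustrated with the standard `sqlite3` module. In this sketch (the table names, columns, and sample rows are hypothetical), a flat transaction log that repeats customer details on every purchase is split into related `customers` and `orders` tables, after which finding the most popular item is a single query.

```python
import sqlite3

# Hypothetical flat transaction log: customer details repeat on every purchase.
transactions = [
    ("t1", "c1", "Alice", "alice@example.com", "Mug", 2),
    ("t2", "c1", "Alice", "alice@example.com", "Tea", 1),
    ("t3", "c2", "Bob", "bob@example.com", "Mug", 1),
    ("t4", "c1", "Alice", "alice@example.com", "Mug", 3),
]

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id TEXT PRIMARY KEY, name TEXT, email TEXT);
    CREATE TABLE orders (
        order_id TEXT PRIMARY KEY,
        customer_id TEXT REFERENCES customers(customer_id),
        item TEXT,
        quantity INTEGER
    );
""")

for order_id, cust_id, name, email, item, qty in transactions:
    # Store each customer once; repeated purchases only add order rows.
    conn.execute("INSERT OR IGNORE INTO customers VALUES (?, ?, ?)", (cust_id, name, email))
    conn.execute("INSERT INTO orders VALUES (?, ?, ?, ?)", (order_id, cust_id, item, qty))

# With the data split into related tables, "most popular item" is a short query.
popular = conn.execute(
    "SELECT item, SUM(quantity) AS units FROM orders GROUP BY item ORDER BY units DESC"
).fetchall()
print(popular)  # [('Mug', 6), ('Tea', 1)]
```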

Modern ETL Dynamics

As data types and sources have multiplied, modern ETL frameworks have adapted to contemporary data landscapes. Cloud technology has produced large-scale data repositories, also known as “data sinks,” that can receive vast volumes of data from many sources. Modern ETL tools have become more sophisticated in turn, connecting to these diverse data sinks and supporting contemporary data formats as well as legacy ones.

Examples of Modern Data Repositories

Data Warehouses: Serving as centralized repositories, data warehouses house multiple databases, each organized into structured tables delineating various data types. Leveraging diverse storage hardware configurations, including solid-state drives (SSDs) and cloud-based storage solutions, data warehouse software optimizes data processing capabilities, facilitating streamlined analytics workflows and informed decision-making.

Data Lakes: Representing a paradigm shift in data storage, data lakes offer a unified repository accommodating structured and unstructured data at scale. Unlike traditional repositories, data lakes eschew upfront data structuring, allowing for the ingestion of raw data without predefined schema constraints. This flexibility empowers organizations to conduct a spectrum of analytics, encompassing SQL queries, big data analytics, real-time insights, and machine learning algorithms, thereby fostering data-driven decision-making and innovation.

The evolution of ETL methodology reflects a relentless pursuit of efficacy and adaptability, aligning with the evolving needs and aspirations of organizations traversing the intricate realms of data analytics and management in the digital age.

How does Data Extraction work?

Data extraction in extract, transform, and load (ETL) operations involves retrieving or copying raw data from diverse sources and storing it in a designated staging area. This staging area, often called a landing zone, is an intermediate storage space that holds the extracted data temporarily. It is typically transient: its contents are purged once the data has been processed and loaded, although some instances may require retaining a data archive for diagnostic or troubleshooting purposes.
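A landing zone can be approximated with a temporary directory. The sketch below assumes JSON files as the staging format and uses a hypothetical helper, `run_with_staging`: it writes the extract to the staging area, lets later steps read from there instead of the source, optionally archives a copy for troubleshooting, and purges the staging area automatically when processing ends.

```python
import json
import shutil
import tempfile
from pathlib import Path

def run_with_staging(records, archive_dir=None):
    """Hypothetical helper: stage an extract, process it, then purge the staging area."""
    with tempfile.TemporaryDirectory(prefix="landing_zone_") as staging:
        staging_path = Path(staging)
        staged_file = staging_path / "extract.json"
        staged_file.write_text(json.dumps(records))  # land the raw extract
        # Later steps read from the staging area rather than the source system.
        loaded = json.loads(staged_file.read_text())
        if archive_dir is not None:
            # Optionally keep a copy for troubleshooting before the purge.
            Path(archive_dir).mkdir(parents=True, exist_ok=True)
            shutil.copy(staged_file, Path(archive_dir) / staged_file.name)
    # Leaving the with-block deletes the temporary directory and its contents.
    return loaded, staging_path

data, path = run_with_staging([{"id": 1}, {"id": 2}])
print(data, path.exists())  # [{'id': 1}, {'id': 2}] False
```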

The frequency at which data is transmitted from the data source to the target data repository hinges upon the underlying change data capture mechanism. Data extraction typically occurs through one of the following methodologies:

Update Notification: In this approach, the source system proactively notifies the ETL process of any alterations to data records. Subsequently, the extraction process is triggered to capture and incorporate these changes. Many databases and web applications furnish update mechanisms to facilitate seamless integration through this method.
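In practice, update notifications arrive through mechanisms such as database triggers, change streams, or webhooks, and their details vary by system. The in-process sketch below only illustrates the push pattern: the `SourceSystem` class and `on_change` handler are hypothetical, and the ETL side reacts to each notification by extracting just the record that changed.

```python
from typing import Callable, Dict, List

class SourceSystem:
    """Hypothetical source that pushes change notifications to subscribers."""

    def __init__(self):
        self.records: Dict[int, dict] = {}
        self._subscribers: List[Callable[[str, int, dict], None]] = []

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def upsert(self, key, value):
        op = "update" if key in self.records else "insert"
        self.records[key] = value
        for notify in self._subscribers:
            notify(op, key, value)  # push the change; the ETL side never polls

target = {}
change_log = []

def on_change(op, key, value):
    """ETL side: extract only the record named in the notification."""
    change_log.append((op, key))
    target[key] = dict(value)

source = SourceSystem()
source.subscribe(on_change)
source.upsert(1, {"status": "new"})
source.upsert(1, {"status": "shipped"})
print(change_log, target)  # [('insert', 1), ('update', 1)] {1: {'status': 'shipped'}}
```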

Incremental Extraction: For data sources incapable of providing update notifications, an incremental extraction strategy is adopted. This methodology entails identifying and extracting data that has undergone modifications within a specified timeframe. The system periodically checks for such alterations, be it on a weekly, monthly, or campaign-driven basis, thereby obviating the need to extract entire datasets repeatedly.
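Incremental extraction is commonly implemented with a watermark, the latest change timestamp seen in the previous run. This sketch assumes the source table has a reliable `updated_at` column stored as ISO 8601 text (so string comparison matches time order); the table, its rows, and the `extract_incremental` helper are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the source system
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 10.0, "2024-01-01T09:00:00"),
     (2, 25.5, "2024-01-03T12:30:00"),
     (3, 7.25, "2024-01-05T08:15:00")],
)

def extract_incremental(conn, watermark):
    """Return rows changed after `watermark` plus the new watermark to persist."""
    rows = conn.execute(
        "SELECT id, amount, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else watermark
    return rows, new_watermark

# Run following a previous extraction that stopped at Jan 2.
changed, watermark = extract_incremental(conn, "2024-01-02T00:00:00")
print([r[0] for r in changed], watermark)  # [2, 3] 2024-01-05T08:15:00

# A later run with nothing new returns no rows and keeps the watermark.
changed, watermark = extract_incremental(conn, watermark)
print(changed, watermark)  # [] 2024-01-05T08:15:00
```

Persisting the returned watermark between runs is what keeps each run from re-reading the whole table. Rows that share the exact boundary timestamp but arrive late can be missed with a strict `>` comparison, so real implementations often add an overlap window or a tie-breaking key.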

Full Extraction: In scenarios where data sources neither offer change identification nor notification capabilities, resorting to a full extraction approach becomes imperative. This method necessitates reloading all data from the source, as there are no mechanisms in place to pinpoint incremental changes. To optimize efficiency, this technique typically involves retaining a copy of the previous extraction, facilitating the identification of new records. However, owing to the potential for high data transfer volumes, it is advisable to restrict the use of full extraction to smaller datasets.
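When the source cannot identify changes, the previous extraction can be kept and compared with the new full extract. The `diff_snapshots` helper below is a hypothetical sketch that assumes every record has a unique `id` key; it classifies records as new, changed, or deleted.

```python
def diff_snapshots(previous, current, key="id"):
    """Classify records in a new full extract against the previous one."""
    prev = {row[key]: row for row in previous}
    curr = {row[key]: row for row in current}
    new = [curr[k] for k in sorted(curr.keys() - prev.keys())]
    deleted = [prev[k] for k in sorted(prev.keys() - curr.keys())]
    changed = [curr[k] for k in sorted(curr.keys() & prev.keys()) if curr[k] != prev[k]]
    return new, changed, deleted

# Hypothetical previous and current full extracts.
previous = [{"id": 1, "qty": 5}, {"id": 2, "qty": 3}, {"id": 3, "qty": 8}]
current = [{"id": 1, "qty": 5}, {"id": 2, "qty": 4}, {"id": 4, "qty": 1}]

new, changed, deleted = diff_snapshots(previous, current)
print(new)      # [{'id': 4, 'qty': 1}]
print(changed)  # [{'id': 2, 'qty': 4}]
print(deleted)  # [{'id': 3, 'qty': 8}]
```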

Understanding Data Transformation

In ETL, data transformation is the stage in which raw data in the staging area is restructured and refined to meet the requirements of the target data warehouse. It covers a range of changes that improve data quality, consistency, and compatibility. Key categories of data transformation include:

Basic Data Transformation: This category encompasses fundamental alterations aimed at rectifying errors, mitigating inconsistencies, and simplifying data structures. Examples include:

Data Cleansing: Rectifying errors and inconsistencies within the dataset, such as mapping empty data fields to predefined values or standardizing data formats.

Data Deduplication: Identifying and eliminating duplicate records to ensure data integrity and streamline analysis.

Data Format Revision: Standardizing data formats, including character sets, measurement units, and date/time values, to establish uniformity across the dataset.
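The three basic transformations can be combined in a single pass over staged records. In this sketch the field names, the empty-value default `UNKNOWN`, the two accepted date formats, and the pound-to-kilogram unit rule are all assumptions for illustration; deduplication uses `order_id` as the business key.

```python
from datetime import datetime

# Hypothetical staged records: an empty field, mixed units and date formats,
# and an exact duplicate.
raw = [
    {"order_id": "A1", "country": "", "weight": "2 lb", "date": "03/15/2024"},
    {"order_id": "A2", "country": "de", "weight": "1.5 kg", "date": "2024-03-16"},
    {"order_id": "A1", "country": "", "weight": "2 lb", "date": "03/15/2024"},
]

LB_TO_KG = 0.45359237  # exact definition of the international pound

def to_kg(text):
    """Format revision: convert strings such as '2 lb' or '1.5 kg' to kilograms."""
    value, unit = text.split()
    if unit == "lb":
        return round(float(value) * LB_TO_KG, 3)
    if unit == "kg":
        return float(value)
    raise ValueError(f"unsupported unit: {unit!r}")

def to_iso_date(text):
    """Format revision: accept ISO or US-style dates and emit ISO 8601."""
    for fmt in ("%Y-%m-%d", "%m/%d/%Y"):
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")

def basic_transform(rows):
    seen, out = set(), []
    for row in rows:
        if row["order_id"] in seen:  # deduplication on the business key
            continue
        seen.add(row["order_id"])
        out.append({
            "order_id": row["order_id"],
            # Cleansing: map an empty country to a default and normalize case.
            "country": row["country"].strip().upper() or "UNKNOWN",
            "weight_kg": to_kg(row["weight"]),
            "date": to_iso_date(row["date"]),
        })
    return out

for record in basic_transform(raw):
    print(record)
```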

Advanced Data Transformation:

Advanced transformations leverage business rules to optimize data for nuanced analysis and decision-making. Key techniques include:

Derivation: Calculating new data attributes based on predefined business rules, such as deriving profit margins from revenue and expenses.

Joining: Integrating data from disparate sources by linking related datasets based on common attributes, facilitating comprehensive analysis and reporting.

Splitting: Dividing composite data attributes into distinct components, such as parsing full names into first, middle, and last names.

Summarization: Aggregating granular data into concise summaries to facilitate high-level analysis and trend identification, such as calculating customer lifetime value from individual purchase transactions.

Encryption: Safeguarding sensitive data by applying encryption algorithms to ensure compliance with data privacy regulations and mitigate security risks during data transmission.
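Derivation, joining, splitting, and summarization can be sketched over two small in-memory datasets. The data, the margin formula `(revenue - cost) / revenue`, and the simple name-splitting rule are assumptions for illustration, and lifetime value is approximated here as total revenue per customer. Encryption is deliberately left out: it should rely on a vetted cryptography library rather than hand-written standard-library code.

```python
from collections import defaultdict

# Hypothetical source datasets.
customers = [
    {"customer_id": 1, "full_name": "Ada King Lovelace"},
    {"customer_id": 2, "full_name": "Alan Turing"},
]
orders = [
    {"order_id": 10, "customer_id": 1, "revenue": 120.0, "cost": 80.0},
    {"order_id": 11, "customer_id": 1, "revenue": 60.0, "cost": 45.0},
    {"order_id": 12, "customer_id": 2, "revenue": 200.0, "cost": 150.0},
]

def split_name(full_name):
    """Splitting: break a full name into first, middle, and last parts."""
    parts = full_name.split()
    middle = " ".join(parts[1:-1]) or None  # None when there is no middle name
    return parts[0], middle, parts[-1]

customers_by_id = {c["customer_id"]: c for c in customers}

enriched = []
for order in orders:
    customer = customers_by_id[order["customer_id"]]  # joining on customer_id
    first, middle, last = split_name(customer["full_name"])  # splitting
    margin = (order["revenue"] - order["cost"]) / order["revenue"]  # derivation
    enriched.append({
        **order,
        "first_name": first,
        "middle_name": middle,
        "last_name": last,
        "margin": round(margin, 3),
    })

# Summarization: roll individual orders up to total revenue per customer.
lifetime_value = defaultdict(float)
for row in enriched:
    lifetime_value[row["customer_id"]] += row["revenue"]

print(dict(lifetime_value))  # {1: 180.0, 2: 200.0}
```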

By combining basic and advanced data transformations, ETL processes refine raw data into a cohesive, standardized format optimized for storage, analysis, and decision-making within the target data warehouse. This improves data integrity, usability, and relevance, helping organizations derive actionable insights and realize the full value of their data assets.

Understanding Data Loading

Data loading represents the pivotal stage wherein the transformed data residing within the staging area is seamlessly transferred into the designated target data warehouse. Characterized by automation, precision, and continuity, the data loading process adheres to well-defined protocols and is often executed in batch-driven cycles. Two primary methodologies for data loading are employed:

Full Load: The full load method entails the transfer of the entire dataset from the source to the target data warehouse. Typically enacted during the initial data migration phase, this approach ensures comprehensive data synchronization between the source and target systems.

Incremental Load: Incremental loading involves the extraction and transfer of only the incremental changes or “delta” between the source and target systems at regular intervals. This method optimizes efficiency by selectively updating the dataset based on recent modifications. There are two predominant strategies for implementing incremental loading:

Streaming Incremental Load: Ideal for scenarios with relatively small data volumes, streaming incremental loading involves the continual transmission of data changes via dedicated pipelines to the target data warehouse. Leveraging event stream processing, this method facilitates real-time monitoring and processing of data streams, enabling prompt decision-making in response to evolving datasets.

Batch Incremental Load: Suited for scenarios characterized by large data volumes, batch incremental loading entails the periodic collection and transfer of data changes in predefined batches. During these synchronized intervals, both source and target systems remain static to ensure seamless data synchronization. This approach balances the computational overhead associated with processing large datasets while optimizing data consistency and integrity.
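Both loading methods can be sketched against SQLite standing in for the target warehouse. The `products` table and helper names are hypothetical; `full_load` replaces the whole table in one transaction, while `incremental_load` upserts only the delta in fixed-size batches. The `ON CONFLICT ... DO UPDATE` upsert syntax requires SQLite 3.24 or later.

```python
import sqlite3

def full_load(conn, rows):
    """Full load: replace the entire target table with the incoming dataset."""
    with conn:  # commits on success, rolls back on error
        conn.execute("DELETE FROM products")
        conn.executemany("INSERT INTO products (sku, price) VALUES (?, ?)", rows)

def incremental_load(conn, delta, batch_size=1000):
    """Incremental load: upsert only changed rows, one transaction per batch."""
    for start in range(0, len(delta), batch_size):
        batch = delta[start:start + batch_size]
        with conn:
            conn.executemany(
                "INSERT INTO products (sku, price) VALUES (?, ?) "
                "ON CONFLICT(sku) DO UPDATE SET price = excluded.price",
                batch,
            )

conn = sqlite3.connect(":memory:")  # stand-in for the target warehouse
conn.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, price REAL)")
full_load(conn, [("A", 1.0), ("B", 2.0)])
incremental_load(conn, [("B", 2.5), ("C", 3.0)], batch_size=1)
print(conn.execute("SELECT * FROM products ORDER BY sku").fetchall())
# [('A', 1.0), ('B', 2.5), ('C', 3.0)]
```

A streaming variant would call `incremental_load` with each change event as it arrives rather than accumulating a batch first.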

Through the meticulous execution of data loading procedures, ETL tools orchestrate the seamless transfer of transformed data into the target data warehouse, thereby furnishing organizations with a unified and comprehensive repository primed for advanced analytics, decision-making, and strategic insights.

Understanding ELT

Extract, Load, and Transform (ELT) represents a paradigm shift from the traditional Extract, Transform, and Load (ETL) methodology by reversing the sequence of operations. In ELT, data is extracted from source systems, loaded directly into the target data warehouse, and subsequently transformed within the warehouse environment. The need for an intermediate staging area is obviated as modern target data warehouses possess robust data mapping capabilities, facilitating in-situ transformations.
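The ELT ordering can be sketched with SQLite standing in for the target warehouse. The raw rows and column names are hypothetical: the data is loaded untouched into a `raw_events` table, and only afterwards is it cleaned and reshaped with SQL that runs inside the database.

```python
import sqlite3

# Hypothetical raw source rows, kept exactly as extracted (all text).
raw_rows = [
    (" u1 ", "view", ""),
    ("u1", "purchase", "19.99"),
    ("u2", "PURCHASE", "5"),
]

conn = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse

# Extract + Load: land the raw data without reshaping it.
conn.execute("CREATE TABLE raw_events (user_id TEXT, action TEXT, price TEXT)")
conn.executemany("INSERT INTO raw_events VALUES (?, ?, ?)", raw_rows)

# Transform: done afterwards, inside the database, with SQL.
conn.execute("""
    CREATE TABLE purchases AS
    SELECT TRIM(user_id) AS user_id, CAST(price AS REAL) AS price
    FROM raw_events
    WHERE LOWER(action) = 'purchase'
""")
print(conn.execute("SELECT user_id, price FROM purchases ORDER BY user_id").fetchall())
# [('u1', 19.99), ('u2', 5.0)]
```

Because the raw table is preserved, new transformations can be written later against the same loaded data without re-extracting it.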

Comparison of ETL and ELT:

ETL:

- Involves extracting data from source systems.

- Transforms the data externally in an intermediate staging area.

- Loads the transformed data into the target data warehouse.

- Requires upfront planning and definition of transformations.

ELT:

- Extracts data from source systems.

- Loads the raw data directly into the target data warehouse.

- Transforms the data within the warehouse environment.

- Capitalizes on the processing power of the target database for transformations.

Advantages of ELT

- Well-suited for high-volume and unstructured datasets that necessitate frequent loading.

- Ideal for big data scenarios, enabling post-extraction analytics planning.

- Minimizes upfront transformation work by loading minimally processed raw data into the warehouse.

- Particularly advantageous in cloud environments, leveraging the processing capabilities of target databases.

The Transition from ETL to ELT

Historically, ETL processes required extensive upfront planning and involvement of analytics teams to define target data structures and relationships.

Data scientists primarily utilized ETL to migrate legacy databases into data warehouses.

With the advent of cloud infrastructure and the rise of big data, ELT has gained prominence as the preferred approach for data integration and analytics.

ELT represents a flexible and efficient approach to data integration, enabling organizations to leverage the scalability and processing power of cloud-based target data warehouses while streamlining the data transformation process.

Understanding Data Virtualization

Data virtualization is a data integration approach that uses a software abstraction layer to create a unified, integrated view of data without physically moving, altering, or copying the data into a separate repository. With this method, organizations can establish a virtual data repository that presents disparate data sources as one, without a separate platform for loading and transforming the data. This reduces the complexity and cost of building and managing separate data platforms.

While data virtualization can be used in conjunction with traditional extract, transform, and load (ETL) processes, it is increasingly recognized as an alternative to physical data integration methods. For instance, AWS Glue Elastic Views enables users to swiftly generate virtual tables, or materialized views, from multiple heterogeneous source data stores, facilitating seamless data access and analysis.
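The core idea of data virtualization, a query-time view over sources that stay where they are, can be illustrated locally with SQLite. This is an analogy under stated assumptions, not how AWS Glue Elastic Views works: two attached in-memory databases stand in for separate source systems, and a `TEMP` view joins them without copying any rows, so changes in a source show up in the view immediately.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Two independent databases stand in for separate source systems.
conn.execute("ATTACH DATABASE ':memory:' AS crm")
conn.execute("ATTACH DATABASE ':memory:' AS billing")
conn.execute("CREATE TABLE crm.customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE TABLE billing.invoices (customer_id INTEGER, total REAL)")
conn.executemany("INSERT INTO crm.customers VALUES (?, ?)", [(1, "Alice"), (2, "Bob")])
conn.executemany(
    "INSERT INTO billing.invoices VALUES (?, ?)", [(1, 50.0), (1, 25.0), (2, 10.0)]
)

# A view is only a stored query: no rows are copied out of either source.
# SQLite requires TEMP here because the view references attached databases.
conn.execute("""
    CREATE TEMP VIEW customer_spend AS
    SELECT c.name, SUM(i.total) AS spend
    FROM crm.customers AS c
    JOIN billing.invoices AS i ON i.customer_id = c.id
    GROUP BY c.name
""")
print(conn.execute("SELECT * FROM customer_spend ORDER BY name").fetchall())
# [('Alice', 75.0), ('Bob', 10.0)]

# Changes in a source are visible through the view immediately.
conn.execute("INSERT INTO billing.invoices VALUES (2, 5.0)")
print(conn.execute("SELECT spend FROM customer_spend WHERE name = 'Bob'").fetchone())
# (15.0,)
```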

Understanding AWS Glue

Amazon Web Services (AWS) provides a serverless data integration service called AWS Glue that simplifies discovering, preparing, moving, and integrating data from diverse sources for analytics, machine learning, and application development. Key features of AWS Glue include:

- Connectivity to 80+ different data stores, enabling seamless access to a wide array of data sources.

- Centralized data catalog management, facilitating efficient organization and discovery of data assets.

- AWS Glue Studio, which empowers data engineers, ETL developers, data analysts, and business users to create, execute, and monitor ETL pipelines for loading data into data lakes.

- Versatile interface options, including Visual ETL, Notebook, and code editor interfaces, catering to users with varying skill sets and preferences.

- Interactive Sessions feature, allowing data engineers to explore and manipulate data interactively using their preferred integrated development environment (IDE) or notebook environment.

- Serverless architecture that automatically scales based on demand, enabling seamless handling of petabyte-scale data without the need for manual infrastructure management.

In essence, AWS Glue and data virtualization represent innovative approaches to data integration and management, offering organizations streamlined and scalable solutions for leveraging their data assets to drive insights and innovation.

Final Thoughts

ETL (Extract, Transform, Load) plays a pivotal role in modern data management and analytics ecosystems. By extracting raw data from diverse sources, transforming it into a standardized format, and loading it into a target repository, ETL delivers the reliable flow of information that informed decision-making and strategic planning depend on.

While traditional ETL methodologies have long been the cornerstone of data integration, the emergence of alternatives like ELT (Extract, Load, Transform) and data virtualization underscores the evolving landscape of data management, offering organizations new avenues for efficiency and agility. As technologies such as AWS Glue continue to evolve, the future of ETL promises to be increasingly dynamic, empowering businesses to harness the full potential of their data assets for growth and innovation.